LLMs Are Impressive. But Are SLMs More Practical for Business?

Boosting Product Development

Machine Learning for CAE

Study of Modal Characteristics of a System using K-nearest neighbors: Machine Learning Approach

Introduction

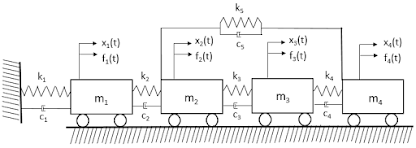

Studying the modal characteristics of a system is how its natural frequencies are determined. In this study, a four-DOF (degrees-of-freedom) system, shown in Figure 1, is analyzed for the parameters listed in Table 1. The mass, stiffness and damping matrices for the whole system are assembled, and the frequency response function is then calculated to identify the resonant frequencies of the system.

A data set is prepared by varying the mass and the parallel stiffness of the system over a range of values and recording the first resonant frequency for each combination. Latin hypercube sampling is used to spread the data points (mass and stiffness) evenly across the design space.
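The sampling step can be illustrated with a minimal sketch in plain Python. The function name `latin_hypercube`, the bounds and the sample count are assumptions made for illustration; the original study does not state its ranges or which mass was varied.

```python
import random

def latin_hypercube(n_samples, bounds, seed=None):
    """Return n_samples points. Each variable's range is split into
    n_samples equal strata and every stratum is used exactly once."""
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        # One random point inside each of the n_samples equal-width strata.
        unit = [(i + rng.random()) / n_samples for i in range(n_samples)]
        # Shuffle so the strata are paired randomly across variables.
        rng.shuffle(unit)
        columns.append([low + u * (high - low) for u in unit])
    # Transpose the per-variable columns into (mass, stiffness) tuples.
    return list(zip(*columns))

# Hypothetical design space: mass in kg, parallel stiffness in N/m.
bounds = [(10.0, 20.0), (1e4, 1e5)]
samples = latin_hypercube(8, bounds, seed=0)
for mass, stiffness in samples:
    print(f"mass = {mass:6.2f} kg, stiffness = {stiffness:9.1f} N/m")
```

Each of the 8 mass strata and each of the 8 stiffness strata contains exactly one sample, which is what distinguishes this from plain random sampling.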

A KNN (k-nearest neighbors) regression model is fitted to this data using an optimal value of k. The fitted model is then used to predict the resonant frequency for a new combination of mass and stiffness. The prediction was observed to be close to the actual value.
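A minimal sketch of KNN regression with a simple way of choosing k is given below. It is not the study's implementation: the helper names, the min-max scaling, the leave-one-out selection of k and the training data are all assumptions. In particular, the target values come from the single-DOF formula f = sqrt(k/m) / (2π), used only as a stand-in for the 4 DOF solver.

```python
import math

def knn_predict(train_X, train_y, query, k):
    """Predict by averaging the targets of the k nearest training points."""
    # Min-max scale each feature so mass (kg) and stiffness (N/m)
    # contribute comparably to the distance.
    lows = [min(col) for col in zip(*train_X)]
    highs = [max(col) for col in zip(*train_X)]

    def scale(x):
        return [(v - lo) / (hi - lo) if hi > lo else 0.0
                for v, lo, hi in zip(x, lows, highs)]

    q = scale(query)
    ranked = sorted((math.dist(scale(x), q), y) for x, y in zip(train_X, train_y))
    nearest = ranked[:k]
    return sum(y for _, y in nearest) / len(nearest)

def choose_k(train_X, train_y, candidates):
    """Pick the k with the lowest leave-one-out mean squared error."""
    def loo_error(k):
        total = 0.0
        for i in range(len(train_X)):
            X = train_X[:i] + train_X[i + 1:]
            y = train_y[:i] + train_y[i + 1:]
            total += (knn_predict(X, y, train_X[i], k) - train_y[i]) ** 2
        return total / len(train_X)
    return min(candidates, key=loo_error)

# Placeholder training data (assumed values, not the study's data set).
masses = [10.0, 12.5, 15.0, 17.5, 20.0]
stiffnesses = [2e4, 4e4, 6e4, 8e4, 1e5]
train_X = [(m, s) for m in masses for s in stiffnesses]
train_y = [math.sqrt(s / m) / (2 * math.pi) for m, s in train_X]

k = choose_k(train_X, train_y, candidates=[1, 2, 3, 5])
query = (16.0, 5e4)
predicted = knn_predict(train_X, train_y, query, k)
actual = math.sqrt(query[1] / query[0]) / (2 * math.pi)
print(f"k = {k}, predicted = {predicted:.3f} Hz, actual = {actual:.3f} Hz")
```

Scaling matters here because stiffness values are several orders of magnitude larger than mass values; without it, the distance would be dominated by stiffness alone.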

Modal Analysis

The dynamic equation of motion, global mass, stiffness and damping matrices for the 4 DOF system are derived in the following way.

Figure 1: 4 DOF System

Table 1: Parameters of the 4 DOF system

| DOF number | Mass (kg) | Stiffness (N/m) |
|---|---|---|
| 1 | 125 | 10e6 |
| 2 | 75 | 10.2e6 |
| 3 | 45 | 21e6 |
| 4 | 15 | 9.5e6 |
| 5 | | 5e4 |

Proportional damping coefficient for the mass matrix, a = 0.5

Proportional damping coefficient for the stiffness matrix, b = 0.00001

The equations of motion for each mass are derived in the following way.

The mass, stiffness and damping matrices are then written according to the following relation.
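For a linear system with proportional (Rayleigh) damping, which the coefficients a and b above suggest, the standard matrix form is sketched below. The symbols M, C, K, x and f (mass, damping and stiffness matrices, displacement vector and force vector) are notation assumed here rather than taken from the original derivation.

$$
\mathbf{M}\,\ddot{\mathbf{x}}(t) + \mathbf{C}\,\dot{\mathbf{x}}(t) + \mathbf{K}\,\mathbf{x}(t) = \mathbf{f}(t),
\qquad
\mathbf{C} = a\,\mathbf{M} + b\,\mathbf{K}
$$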

Figure 2: Accelerance - Input at DOF4, Output at DOF4

Figure 3: Accelerance - Input at DOF2, Output at DOF2

Figure 4: Accelerance - Input at DOF2, Output at DOF4
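Accelerance is the ratio of the acceleration response to the applied force. Assuming the standard linear model with mass, damping and stiffness matrices M, C and K (notation assumed here), the accelerance between a force at DOF k and the acceleration at DOF j can be written as:

$$
A_{jk}(\omega) = \frac{\ddot{X}_j(\omega)}{F_k(\omega)}
= -\omega^2 \left[ \left( \mathbf{K} - \omega^2 \mathbf{M} + i\,\omega\,\mathbf{C} \right)^{-1} \right]_{jk}
$$

Peaks in the magnitude of this function mark the resonant frequencies. Under this convention, an input at DOF4 with output at DOF4 corresponds to j = k = 4.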

Machine Learning

LLMs Vs SLMs

LLMs Are Impressive. But Are SLMs More Practical for Business? In the past few weeks, I...

"Are Large Language Models (LLMs) truly the future of enterprise transformation, or can Small Language Models (SLMs) meet most needs more effectively for specific, tailored use cases?"

Here is my analysis so far (still a work in progress):

LLMs like GPT-4 and Claude 3.5 Sonnet are estimated to contain anywhere from 175 billion to over 1 trillion parameters (the vendors have not published exact counts), and they require massive distributed computing infrastructure. Small language models, typically 1-20 billion parameters, such as Llama 3.2 and Gemini Nano, sacrifice some breadth of general knowledge for dramatic gains in efficiency, speed and cost-effectiveness.

Here's the interesting part:

For many enterprise scenarios, smaller models aren't a compromise; they're a strategic advantage. A fine-tuned 7B-parameter model focused on a specific domain can outperform GPT-4 on specialized tasks, all while costing 10x to 50x less to operate.

(Source: https://lnkd.in/erjTAnWu)

Premium LLM APIs can cost as much as $75 per million input tokens (GPT-4.5), while SLMs such as Llama can operate at around $0.03 per million input tokens.
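To put those per-token prices in monthly terms, here is a quick sketch. The workload of 500 million input tokens per month is a hypothetical figure chosen for illustration, and the calculation ignores output tokens, hosting and engineering costs.

```python
def monthly_cost(tokens_per_month, price_per_million_tokens):
    """Input-token cost in USD for a given monthly volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens

LLM_PRICE = 75.00  # USD per million input tokens (GPT-4.5 figure quoted above)
SLM_PRICE = 0.03   # USD per million input tokens (Llama figure quoted above)

# Hypothetical workload, not taken from the post.
tokens_per_month = 500_000_000

llm_cost = monthly_cost(tokens_per_month, LLM_PRICE)
slm_cost = monthly_cost(tokens_per_month, SLM_PRICE)

print(f"LLM: ${llm_cost:,.2f} per month")
print(f"SLM: ${slm_cost:,.2f} per month")
print(f"Ratio: {llm_cost / slm_cost:,.0f}x")
```

At that volume the quoted prices work out to $37,500 versus $15 per month for input tokens alone.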

Adding to this, LLMs often require GPU clusters worth hundreds of thousands of dollars, whereas SLMs can run efficiently on local machines under $10,000.

Most importantly, latency often matters more than capability.

SLMs deliver 6-10 tokens per second on modest hardware, making them well suited to real-time applications. LLMs, despite their power, typically require 5-30 seconds for complex responses and introduce variable latency due to distributed processing. For applications where users expect immediate feedback, this performance gap becomes a competitive disadvantage regardless of the model's theoretical capabilities.

So, unless there is a strong need for strategic analysis or multi-step reasoning, is there a real need for LLMs in enterprise applications?

Follow the blog for a detailed analysis in the next post.